GPT and friends

I’m trying to figure out GPT and generative AI. I’ve not got it figured out yet. At the moment I’m excited about the fundamental advances in AI and the inevitable business model disruptions and opportunities, but I’m more (or is it less?) lukewarm on ChatGPT. If I can avoid another poem about ERP, I’ll die happy.

I rather like this piece from Ian Bogost in The Atlantic.

Perhaps ChatGPT and the technologies that underlie it are less about persuasive writing and more about superb bullshitting.

Generative AI is not without negative externalities, as this article from Time notes.

“They’re impressive, but ChatGPT and other generative models are not magic – they rely on massive supply chains of human labor and scraped data, much of which is unattributed and used without consent,” Andrew Strait, an AI ethicist, recently wrote on Twitter. “These are serious, foundational problems that I do not see OpenAI addressing.”

Meanwhile in Davos

One of our portfolio companies, TechWolf, is pretty deep into all things AI. The founder, Andreas, was at Davos. Together with Jeroen, his cofounder, he wrote up a piece about ethical AI.

An AI tool is only as good as the data sources it works with. Give it inaccurate data and you’ll get inaccurate results. AI, working at scale in your organization, can drastically increase bias if it is modelled on biased or incomplete data. Before you start giving your AI skills data to train on, you must audit your data to ensure it's as accurate and representative as possible.

Ultimately, not all of your data will be objective or equitable. For example, in our work at TechWolf, we’ve discovered that male employees tend to over-report their skills, whereas female employees tend to under-report them. If you fail to understand such contextual factors when using skills data, you’ll find that your system excludes important talent. Undermining the entire point of the skill-based organization.

Speaking of Davos, Azeem Azhar was there. I often read his newsletter, Exponential View, and listen to his podcast. He is a brilliant interviewer, and both are brimful of insights and links to other smart people.

Nicolas Anderson, the editor of The Atlantic magazine, has been quite measured on the positives and negatives of ChatGPT. I thought his surmising on the regulatory context of OpenAI v Google etc. very insightful, picking up on how the threat of antitrust action might actually be working. The Atlantic coverage of AI has been excellent so far.

Mapping the landscape

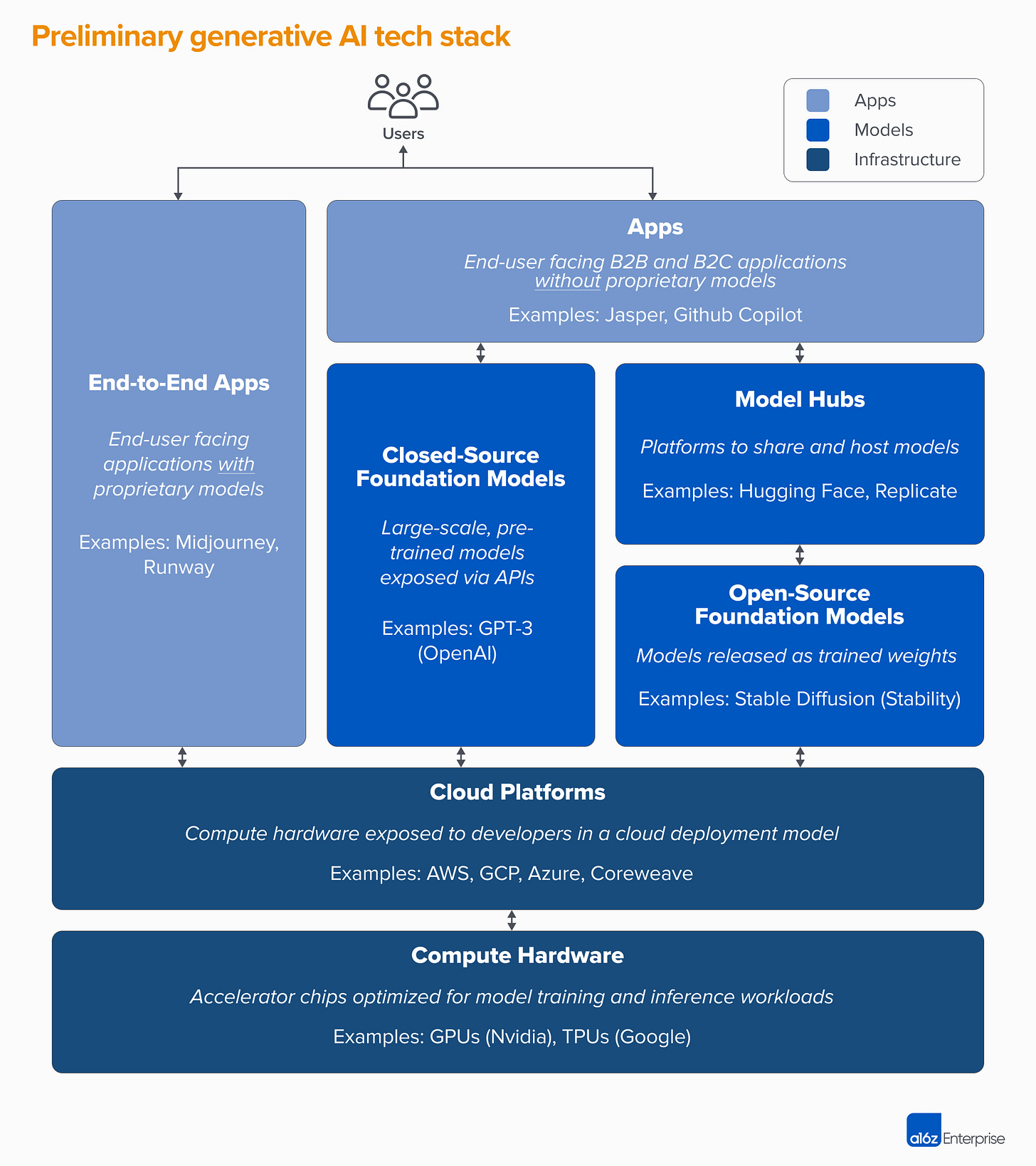

A16Z have published their map of generative AI. (h/t Azeem) I’m going to spend more time digesting this. While there are moments of frothy hyperbole, it outlines the different layers of the stack and the various business opportunities really well.

Based on the available data, it’s just not clear if there will be a long-term, winner-take-all dynamic in generative AI.

This is weird. But to us, it’s good news. The potential size of this market is hard to grasp — somewhere between all software and all human endeavors — so we expect many, many players and healthy competition at all levels of the stack. We also expect both horizontal and vertical companies to succeed, with the best approach dictated by end-markets and end-users. For example, if the primary differentiation in the end-product is the AI itself, it’s likely that verticalization (i.e. tightly coupling the user-facing app to the home-grown model) will win out. Whereas if the AI is part of a larger, long-tail feature set, then it’s more likely horizontalization will occur. Of course, we should also see the building of more traditional moats over time — and we may even see new types of moats take hold.

At the risk of stating the obvious, I suspect that GPT and similar technologies are going to be much more useful and less dangerous when applied to carefully curated datasets. ChatGPT serves to excite and entice.

The ethics and regulatory implications are going to get very interesting. More on that another day.

On exams

ChatGPT does have skillz for passing exams, having passed the medical licensing exams and a Wharton Business School exam. I sense, though, that this is as much a reflection of the limitations of exams in assessing understanding as it is of the brilliance of ChatGPT. Exams will adjust. One of our portfolio companies, Copyleaks, has already launched a detection tool, so I think the cheating problem is going to be solved pretty quickly. The more interesting challenge will be how learning, both corporate and academic, adapts to its usage. In our investment universe, corporate learning is going to get a bit of a shake-up.

Microsoft and business models

Next time I’m in Berlin I really need to spend some time with Gianni. In the meantime, his take on Microsoft and OpenAI is well worth a read. He explores:

A modern AI-first strategy requires us to step away from thinking of OpenAI as a chatbot or image generation software, or as a souped-up search or knowledge manager. A better market segmentation could be based on this question: if monetizable value is generated not by a single person, but rather by an organization, or an ecosystem, how could the synergy between Microsoft and OpenAI unlock it? How would AI support it?

One of my favourite academics to read on tech and innovation is Michael Jacobides. His work on ecosystems really helped me think differently about how the software industry works. You should look him up on Google Scholar. Here’s an excerpt from his post on making sense of what’s happening in tech.

For all the stunning ability of ChatGPT to emulate conversational language, though, its content remains fairly rooted in the data that is already out there – which is fiendishly hard to evaluate and assess, and fraught with misinformation and misconceptions. So, while GPT can sound smart and competent, it remains rather like the executive who rises through the ranks on a strategy of “fake it till you make it.” While chatboxes of this sort will certainly ease customer engagement, we are no further forward in terms of making decisions and automating processes – and further testing and development is unlikely to overcome these inherent limitations.

His next paragraph hits on what is going to be really interesting about generative AI, namely the business models.

Now, to understand which professions, activities, or sectors will be disrupted by generative AI, we will need to see how this new technology, resource- and capital-hungry and therefore concentrated, will be monetized.

1966 and all that

It is probably time to reread one of computer science’s greatest books, Joseph Weizenbaum’s Computer Power and Human Reason. He built what was probably the first chatbot, Eliza, and published a paper about it in 1966. It crudely mimicked a psychotherapist, and he was shocked by how readily people were taken in by it.

“What I had not realized is that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people.”

― Joseph Weizenbaum

It is heartening to see the Eliza effect gaining new attention.

I’m going to keep reading and learning about generative AI. I hope to avoid delusional thinking, but I’ll keep an open mind. What are you reading this week?

Thomas Otter’s exploration of GPT and generative AI is both insightful and thought-provoking. He highlights the transformative potential and ethical challenges of these technologies. The discussions on data accuracy, AI bias, and regulatory implications are particularly relevant. As AI continues to evolve, understanding its impact on business models and society is crucial. Keep the updates coming!

Another cool Generative AI app/site/platform/whatever we're calling it is Looka.com. It is generating logos and branding. I used it recently for the rebrand of my Climate Confident podcast and was delighted with the output tbh