AI again: Felin and Holweg. Theory Is All You Need: AI, Human Cognition, and Decision Making.

The most thought-provoking academic paper I've read in ages.

I have absolutely no idea if we will achieve AGI any time soon or even ever. Come to think of it, I’m not sure that it is even an appropriate goal for us to be aiming for. I’d been thinking there is more to intelligence than merely the ability to make better and better predictions, which is what AI does. While generative AI is achieving all sorts of incredible breakthroughs in medicine, business and more, I have a nagging feeling that it is derivative rather than truly generative.

I read a paper this weekend by Teppo Felin and Matthias Holweg. It was only published on SSRN last week, and has not yet landed in a journal. I expect it will make quite an impact when it does.

Felin, Teppo and Holweg, Matthias, Theory Is All You Need: AI, Human Cognition, and Decision Making (February 23, 2024). Available at https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4737265

Felin and Holweg are neither techno-optimists nor techno-pessimists. And while Felin is a business school prof, he has spent a lot of time exploring biological evolution and philosophy, so he brings a nuanced perspective. I’ve got to know him over the last few years, and his earlier work on value has influenced my investment strategy. Holweg also leads the excellent and very successful Oxford AI course.

Instead of doing all those important things on my to-do list, I headed to my local cafe, re-read the paper, hacked up a few excerpts and added some of my own comments. This isn’t really a proper review, rather my own attempt to process the paper, and it involves some over-simplification.

It is the most interesting and important paper on AI that I’ve read in ages, and it has helped me resolve some of my own internal debates about generative AI.

They coherently articulate:

The impressive progress that AI has made in the last few years, and its remarkable power to perform cognitive tasks, often better than people can, in part because of the imperfections of human “bounded rationality”.

They disagree with the assumption that “machines and humans are (both) a form of input-output device, where the same underlying mechanisms of information processing and learning are at play.”

They argue that “human cognition is forward-looking, necessitating data-belief asymmetries which are manifest in theories.” This is the important bit.

And “an LLM—or any prediction-oriented cognitive AI—can’t truly generate some form of new knowledge. The “generativity” of these models is a type of lower-case-g generativity that shows up in the form of the unique sentences that creatively summarize and repackage existing knowledge.”

“The idea of LLMs as “stochastic parrots” or glorified auto-complete, underestimates their ability. Equally, ascribing an LLM the ability to generate new knowledge overestimates its ability.”

They then go on to apply this to a couple of thought experiments and then extend it to strategy in a business context.

Galileo and the Wright Brothers

I really liked the two thought experiments.

The first one looks at heliocentricity:

Imagine an LLM in the year 1633, where the LLM’s training data incorporated all the scientific and other texts published by humans to that point in history. If the LLM were asked about Galileo’s heliocentric view, how would it respond?

As the LLM has no grounding in truth—beyond what can be inferred from text—it would say that Galileo’s view is a fringe perspective, a delusional belief.

Theory-based causal logic and flight

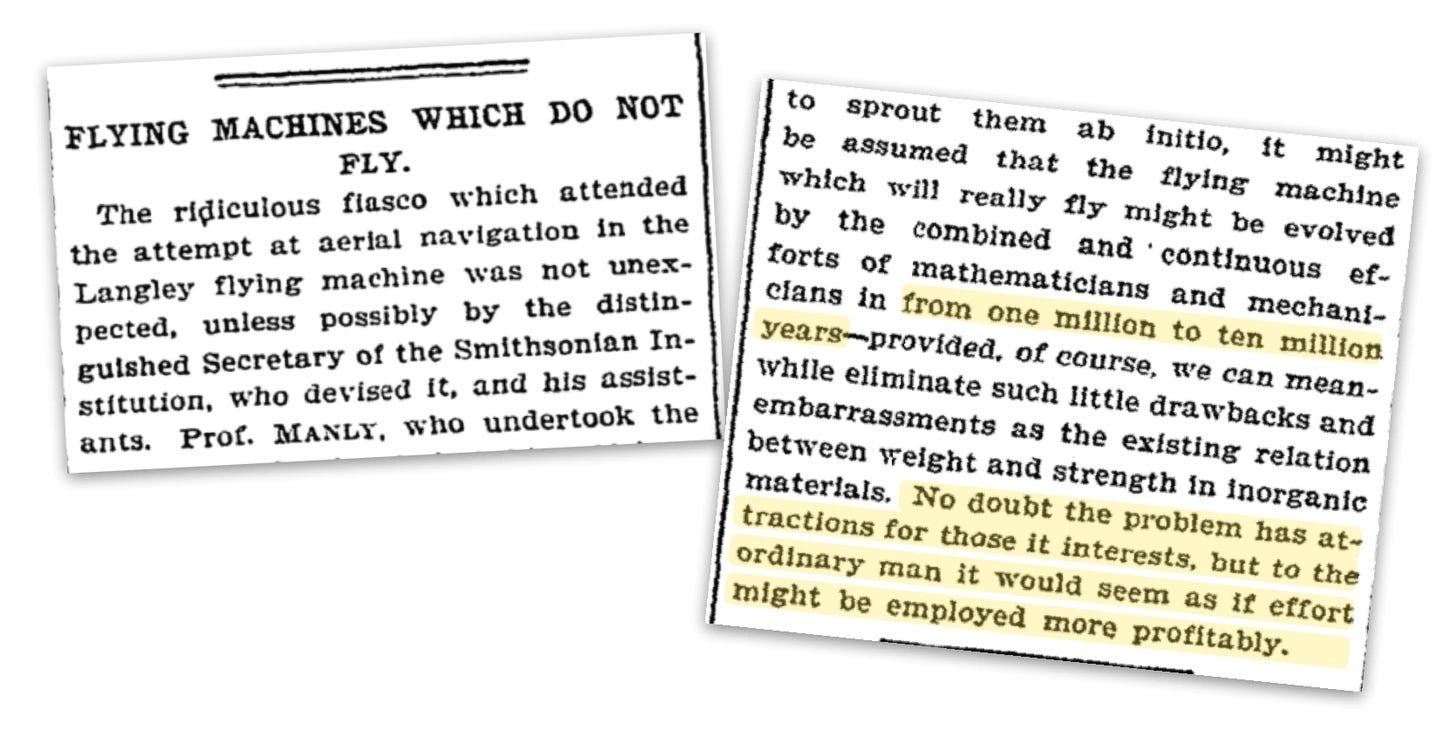

It is easy to forget that the scientific community almost universally considered heavier-than-air flight impossible, Lord Kelvin among them. In 1903 the New York Times stated it would take the “combined and continuous efforts of mathematicians and mechanicians from one million to ten million years” to achieve human flight.

The authors explore how the Wright brothers took a belief about heavier-than-air flight and broke it down into three problems: lift, propulsion, and steering. They figured that if they could solve those three, they would be able to fly. They then ran and documented multiple experiments to explore these problems.

Their success was not just in achieving flight but in demonstrating how a systematic, intervention-based approach can unravel the causal mechanisms underlying complex phenomena, and overcome the shortcomings of existing data.

I’d suggest that if the Wright brothers had had an LLM trained on all the knowledge about flight as of about 1900, it would have told them to stick to bicycles.

“Heterogeneity in beliefs is seen as problematic. In many instances, it is. But heterogeneity in beliefs—belief asymmetry—is also the lifeblood of new ideas, new forms of experimentation, and new knowledge, as we discuss next. Our goal here is simply to highlight that this positive form of data-belief asymmetry also deserves consideration, if we are to account for cognition and how human actors counterfactually think about, intervene in, and shape their surroundings.”

Computational models of information processing offer an omniscient ideal that turns out to be useful in many instances, though not in all instances.

The limits of prediction in business strategy and the need for a theory

AI is in essence a mechanism for prediction. Most of the top AI theorists, AI practitioners and tech bros argue this. In the vast majority of cases, prediction based on past data is a very effective way to make decisions and run your business. Many of the decisions that we make can be made more effectively, quickly and precisely by AI. The purpose of prediction is to minimize surprise and error. For much of business this is a good thing. Often AI enables us to do a far better job of synthesising the past than our own flawed memories can.

Felin and Holweg don’t dispute this, but they argue that this misses the point of what strategy is about. Strategy involves focusing on those things that maximise surprise and error, those things that evade prediction.

“In a strategy context, the most impactful decisions are the ones that seem to ignore (what to others looks like) objective data. In an important sense, strategic decision making has more to do with unpredictability than prediction.”

If almost everyone is using the same set of data and similar AI techniques, and simply uses them to drive strategy, then strategies will become homogeneous and undifferentiated, and innovation will gradually grind to a halt, or be at best merely incremental.

Felin and Holweg propose that firms need to develop a hybrid approach to strategic decision making. They don’t deny the role that AI can play, but make two key points.

“Strategy—if it is to create significant value—needs to be unique and firm-specific and this firm-specificity is tied to unique beliefs and the development of a theory-based logic for creating value unforeseen by others.”

And “That said, if AI—as a cognitive tool—is to be a source of competitive advantage, it has to be utilized in unique or firm-specific ways. Our argument would be that a decision maker’s (like a firm’s) own theory needs to drive this process. AI that uses “universally”-trained AIs—based on widely-available models and datasets—will yield general and non-specific outputs. AIs can (and should) be customized, purpose-trained, and fine-tuned—they need to be made specific—to the theories, datasets and proprietary documents of decision makers like firms—thus yielding unique outputs that reflect unique (rather than ubiquitously available) training data. It is here that we see significant opportunity for AI to serve as a powerful aid in strategic decision making.”

The recent breakthroughs that we have had in AI have been precisely because researchers and scientists have taken positions that an LLM probably would have called dumb. Yet many of those same researchers and founders now attempt to negate the importance of the “process of conjecture, hypothesis, and experimentation” in future innovations by suggesting AGI is just around the corner.

How this impacts my investing strategy

In every investment, I ask the question: what do you do that others think is wrong or foolish? I’ve called it the Galileo position for some time. I’ll reinforce and refine this.

Almost all start-ups need a contrarian theory, where there is an asymmetry between belief and the data available to prove that theory.

AI can play a massive role in automating the conventional. Be ruthless in applying it to make what is non-differentiating efficient. The scope of what is non-differentiating is not constant.

Firms that succeed in applying AI to drive significant value capture will do so because they developed a theory on how to use AI that is specific to their organization. Paradoxically, commercial success with AI will require you to have a theory and not just data.

Thanks Teppo and Matthias for a fascinating paper. I look forward to the next one.

"The miracle of your mind isn't that you can see the world as it is, it's that you can see the world as it isn't." https://www.ted.com/talks/kathryn_schulz_on_being_wrong?language=en

Central to the epithets attributed to a certain S. Jobs is one that sticks out to me each day: Ignore Everybody. Isn’t that what provides the essence of creativity or, as you view it, a contrarian position? It’s certainly what drove me to formulate the business model that drives diginomica. No LLM/AI required.