Of APIs, AI, ecosystems, regulations and people who know stuff

Another meander through research, tech, HR, regulations and our investment thesis.

I repeat this often: if I were to have my days over as a product manager, I would have devoted more R&D resource to APIs, sales commits and rhinos be damned. Easy to say, not so easy to do.

APIs and shifting ecosystems

APIs (application programming interfaces) are the technical enablers of ecosystems and of inter-vendor (and, in large vendors, intra-vendor) connectivity. They are not just technical artefacts. API design and prioritisation are usually determined by the corporate politics of the vendor, mixed in with the competitive dynamics of the market. Nowhere is Conway’s law (systems mirror the communication structures of the organisations that build them) more evident than in API architecture. There is a lot of Machiavelli’s The Prince in there too.

If we examine APIs and integration in HR Tech, improvements in integration middleware are significantly lowering the barriers to connecting applications. Lightweight integrations can now be delivered by integration vendors like Merge and Kombo. The tooling for building deeper integrations has moved on dramatically too, with companies like SnapLogic and Boomi applying significantly more AI to integration. See this example. Smoother, cheaper, more reliable integrations should, in theory, enable niche vendors to compete more effectively. If you can lower the cost of integration, you make the consistency advantages of a suite less attractive. You spend your time building your features and API, rather than trying to figure out ERP integration entrails.

I suspect AI is going to change UX models too, which also helps niche vendors. The chatbot vision of a decade ago finally seems achievable. If I can converse coherently with the application, then the UX becomes natural language rather than menus and radio buttons. That reduces the need for traditional UI consistency. But I’ve been disappointed by chat before.

On the other hand, data politics are going to influence a lot of API architectures going forward. For instance, we will see a lot more restrictions from incumbents on what can be fed into start-ups’ LLMs. Established vendors are likely to use both T&Cs and technical means to gate, toll, and control what start-ups can suck out. It may make the SAP third-party access kerfuffle or LinkedIn’s API contortions seem like a warm-up.

Sometimes the design and definition of an API aren’t determined by the vendor’s penchant for openness (or otherwise), but by regulatory or industry standards.

To integrate your payroll with the German health insurance authorities, you work with the good people at ITSG. There is a defined process to follow, established by standards, regulation (and ultimately legislation). Ordnung muss sein and all that. If you try to build a German payroll without going through this integration validation and following their API standards, you are nuts. No one will buy it.

Diagram taken from my dissertation. It looks complicated because it is. But it eventually gets you from the constitution to working, compliant payroll code. The little eXTRa box is where a bunch of interesting open-source stuff happens.

When we evaluate investments, how the founder thinks about integration, APIs and the various ecosystems is a critical part of our decision making. If you are pitching German payroll, then let the fun begin.

I rather like Jacobides’ definition of ecosystems. It doesn’t roll easily off the tongue, but it has depth.

An ecosystem is a set of actors with varying degrees of multilateral, non-generic complementarities that are not fully hierarchically controlled.

By understanding ecosystem dynamics, you can get a better sense of which firms create value, and who is able to extract it. It isn’t always the same firm.

Check out more of Prof Jacobides’ work here.

Applying ecosystem thinking to HR

One of my concerns with a lot of enterprise HR departments is their almost myopic focus on employees, rather than the broader workforce. Prof Elizabeth Altman’s research highlights the importance of thinking more broadly. She does an excellent job of defining the workforce ecosystem.

A workforce ecosystem is a structure focused on value creation for an organization that encompasses actors, from within the organization and beyond, working to pursue both individual and collective goals, and that includes interdependencies and complementarities among the participants.

Our investments in Oyster and Utmost (now Beeline) align neatly with this.

On AI again, reconnecting and more regulation

I am still forming my opinion on generative AI’s opportunities and limitations. The other day I caught up with Vishal Sikka, and I share much of his concern and excitement. Vishal was CTO at SAP and CEO at Infosys, and is now building an AI company, Vian.ai. He is also on the board at Oracle, and was involved in OpenAI in its very early days. Vishal did his PhD on AI, and actually met Joe Weizenbaum, probably the most important person in AI history.

You might find this talk interesting.

Vishal noted recently:

“There may only be about 20,000 to 30,000 people in the world that understand the true methods of how AI systems run. This is vastly smaller than the 52,000 or so people we estimate are MLOps professionals, or the 1 million we estimate are data scientists. Many of them could not tell you why the system is doing what it is, why it makes the recommendations it does, what could possibly run awry, or how the underlying techniques work.” via Forbes.

This means that the biggest obstacle to successful and safe AI adoption is going to be a shortage of people who know what they are doing. So understanding who really has the skills, building out training, and recruiting effectively become even more critical for organisations and society. It follows that a specialist venture fund focused precisely on the companies building solutions to improve learning, recruitment, and so on will be well placed to deliver outsized returns.

As we deepen our investment thesis on AI at Acadian Ventures, I’m spending a lot of time thinking about AI regulation, and the unintended consequences it will create.

We actually have quite a lot of AI-related regulation already. I’d argue that part of the problem is that existing regulations aren’t consistently or assertively applied, as anyone following the Irish v EU data protection debacle will realise. Copyright may well turn out to be a bunfight too. (Good thing we invested in Copyleaks.) See this on copyright from Ben Evans (thanks for the pointer, Meg Bear), and this from the Guardian about novelists.

AI regulation is going to get more interesting. As Andrea Saltelli notes, regulatory capture is real.

I’m following Gary Marcus’ work closely. Check out p(doom). Gary is the best antidote to AI froth.

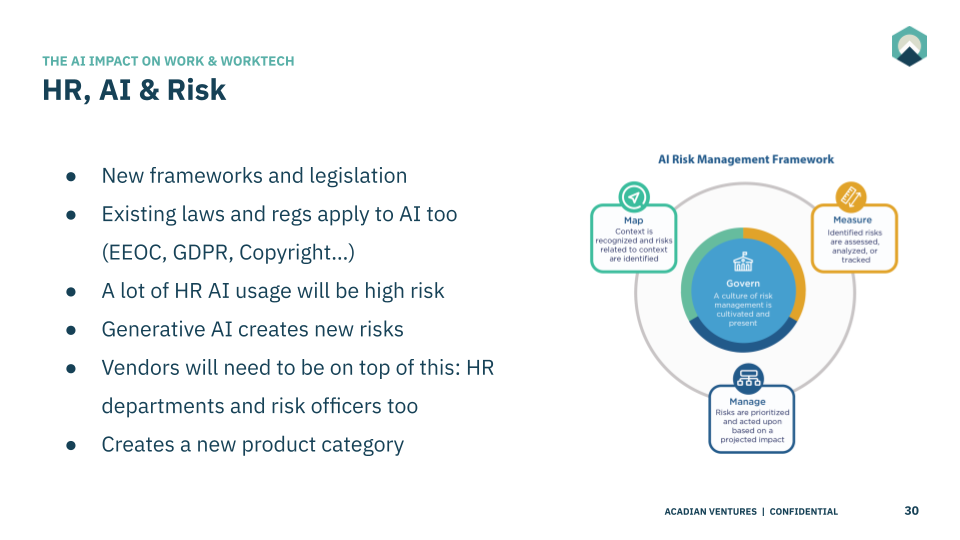

(The diagram is from the NIST AI Risk Management Framework.)

The interplay between regulation and technology is rarely straightforward, as this final paper for the day illustrates.

Regulatory standards and consequences for industry architecture: The case of UK Open Banking by Dize Dinçkol, Pinar Ozcan and Markos Zachariadis.

It brilliantly shows how the process of standards creation and implementation is rarely smooth, and how the initial assumptions of the regulator don’t work out quite as planned.

We show that regulator-led standardization processes can change the architectural configuration of an industry and interorganizational dynamics within through modularization of products/services, emergence of new roles, and new relationships across different organizations. Based on our findings, we propose that standardization is a continuous, multi-stakeholder process where not only formulation decisions, but also the adjustment of industry players to roadblocks in implementation cause recalibration of standards and various shifts in industry architecture. We highlight that new ‘converter’ roles emerging to resolve the interoperability bottlenecks in an industry can subsequently change the industry architecture and blur industry boundaries by ‘converting across industries’.

It would be cool if Pinar and colleagues could meet Ian Brown and Chris Marsden. I reckon they have written the best book on software regulation. It’s called, helpfully, Regulating Code. I’ve read it several times, but I’m weird that way.

A good bit of I don’t know

Our investment thesis on AI currently involves quite a bit of “don’t know”. This slide is part of what we presented to LPs and portfolio companies recently. I’ll share more soon.

As I usually do, let’s finish with a tune, with an oblique link to the post. I’m going with the new OMD song. Great lyrics, and, like AI, it sounds both new and familiar. It is a thoughtful homage to the original Bauhaus staircase.