More learning about AI at Oxford

Navigating my own confusion matrix.

In order that we can invest effectively in AI-related workforce technologies, it is incumbent on us to know a bit about AI. I don’t like to invest in things that I don’t understand. Almost every HR tech pitch these days has an element of AI to it, and I need to get beyond the buzzwords, look beneath the covers, even pick the scabs. I’ve devoted a chunk of 2024 to this task, and it is daunting.

This last week of May I completed the second module of the PostGrad Dip in the Business of AI. It was great to get back to Oxford. Charlotte came with me and worked on her book. Wild mid-life we are having. We even went to a Beethoven concert. I guess I’m not the typical Burning Man VC persona.

Thanks again to Jason for covering the Acadian Ventures fort while I hang out in academic land.

This week built on the first module (my write-up here).

Strengthening our AI thesis

Jason and I know a lot about SaaS. Not as much as Dave Kellogg, but we have some of the scars. I can bore for Germany on the nitty gritty of HR tech in the cloud. I can drone on for hours in numbing detail about technical debt and scaling pain. Jason helped build one of the most impressive HR tech SaaS businesses, Cornerstone. We have a deep well of stu…

Module 2 overview

This module was more technical and took us on a high-speed tour, beginning with linear and logistic regression, then decision trees, artificial neural networks (ANNs) and convolutional neural networks (CNNs), and concluding with large language models (LLMs). Into those 4 days we also packed some economics, a sprinkling of regulation, econometrics, case studies, an excursion into quantum computing and a formal dinner.

The module was led by Prof Bige Kahraman Alper. She is an expert in econometrics and researches financial markets, for instance the impact of ESG on financial performance. She is squarely in the realist school of AI, rather than the “AGI is here tomorrow” school. She argues forcefully that LLMs have their place, but they aren’t the only show in town. Picking the right technique is really important, and sometimes simpler but more robust methods are more appropriate. William of Ockham would approve.

I suspect there is a secret rule that statistics and econometrics professors must refer to Wald’s WWII research on selection bias at least once a module. But it does illustrate the importance of getting your sample selection right. A model is only as good as what goes into it (more about Bayes another day).
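Wald's point can be shown with a minimal Python sketch. Every number in it is made up. The sketch assumes that hits land uniformly on three hypothetical sections of an aircraft, and that an engine hit is much more likely to bring the plane down. If you count bullet holes only on the planes that come home, engines look like the least-hit section. That is the selection effect. It is only loosely inspired by Wald's analysis and does not reproduce it.

```python
import random

# Hypothetical setup, loosely inspired by Wald: each hit lands uniformly at
# random on one section, but hits differ in how likely they are to down a plane.
SECTIONS = ["engine", "fuselage", "wings"]
LOSS_PROB = {"engine": 0.6, "fuselage": 0.1, "wings": 0.05}  # assumed values


def simulate_raids(n_planes=10_000, hits_per_plane=3, seed=42):
    """Return hit counts per section for all planes and for returning planes only."""
    rng = random.Random(seed)
    all_hits = {s: 0 for s in SECTIONS}
    survivor_hits = {s: 0 for s in SECTIONS}
    for _ in range(n_planes):
        hits = [rng.choice(SECTIONS) for _ in range(hits_per_plane)]
        for s in hits:
            all_hits[s] += 1
        # The plane returns only if it survives every hit (assumed independent).
        survived = all(rng.random() > LOSS_PROB[s] for s in hits)
        if survived:
            for s in hits:
                survivor_hits[s] += 1
    return all_hits, survivor_hits


def shares(counts):
    """Convert raw counts into each section's share of the total."""
    total = sum(counts.values())
    return {s: counts[s] / total for s in counts}


all_hits, survivor_hits = simulate_raids()
print("share of hits, all planes:      ", shares(all_hits))
print("share of hits, returning planes:", shares(survivor_hits))
```

The returning planes show relatively few engine hits. The reason is that planes hit in the engine mostly did not return, not that engines are rarely hit. The sample the model sees has already been filtered by the outcome.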

Various faculty from SBS and elsewhere at Oxford taught the other sessions, and there were a couple of guest speakers from industry. The takeaway from the industry sessions is that implementing AI in production is a lot tougher than the hype would have us believe.

What impressed me with the statistics / ML / data professors (Bige, Siddharth Arora, Natalia Efremova, Agni Orfanoudaki, Shumiao Ouyang and Jeremy Large) was their ability to largely avoid the curse of knowledge. Lecturing a room full of 30-to-60-year-old exec types with varying technical skills, many of whom are not afraid to ask questions or make comments, is not easy. It is abundantly clear that the faculty really love their jobs and know their onions.

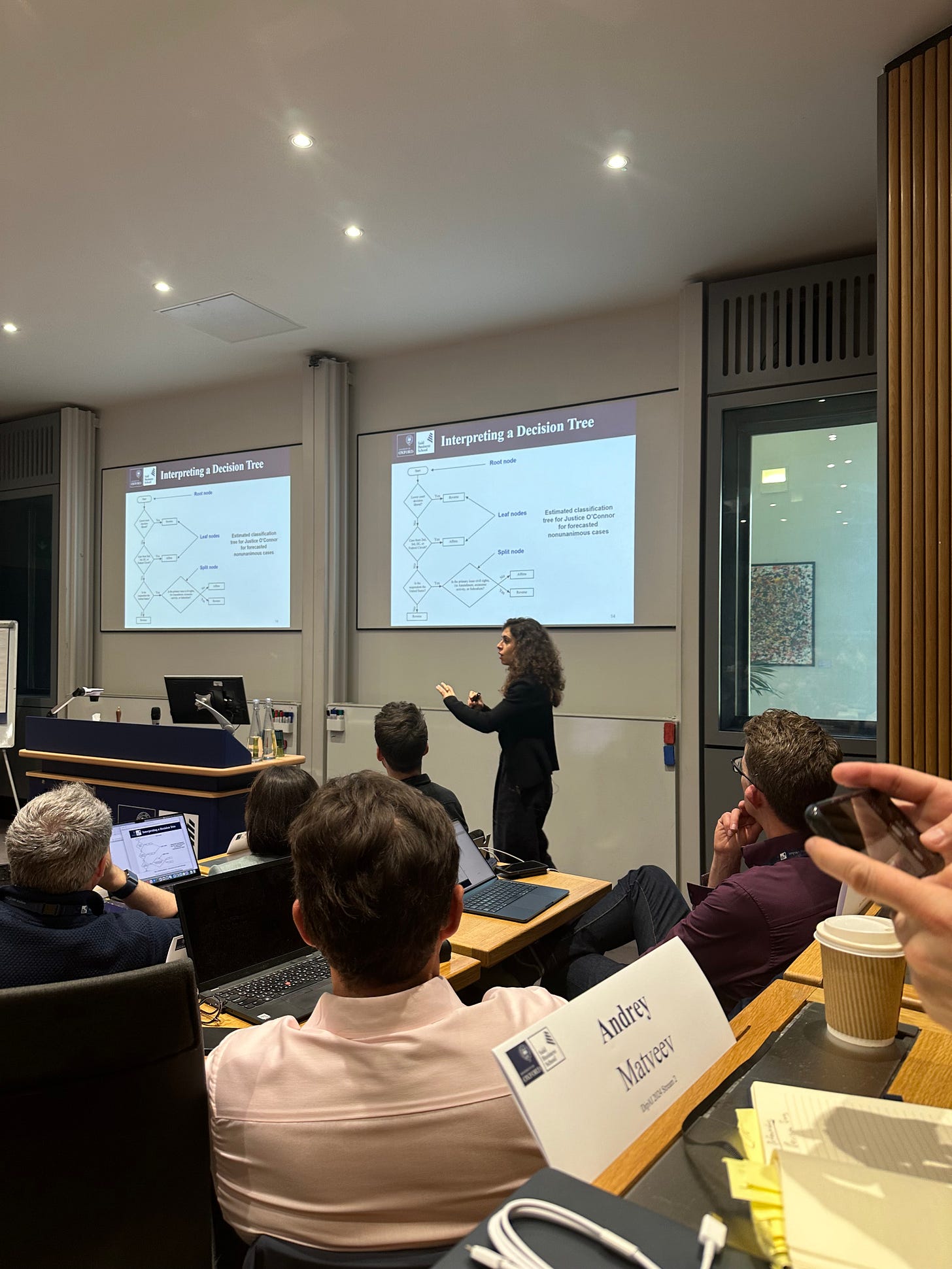

I found Agni Orfanoudaki’s session profoundly effective. Her impressive research focus is on medical AI, at the leading edge of ML research. I can see why she is so highly rated.

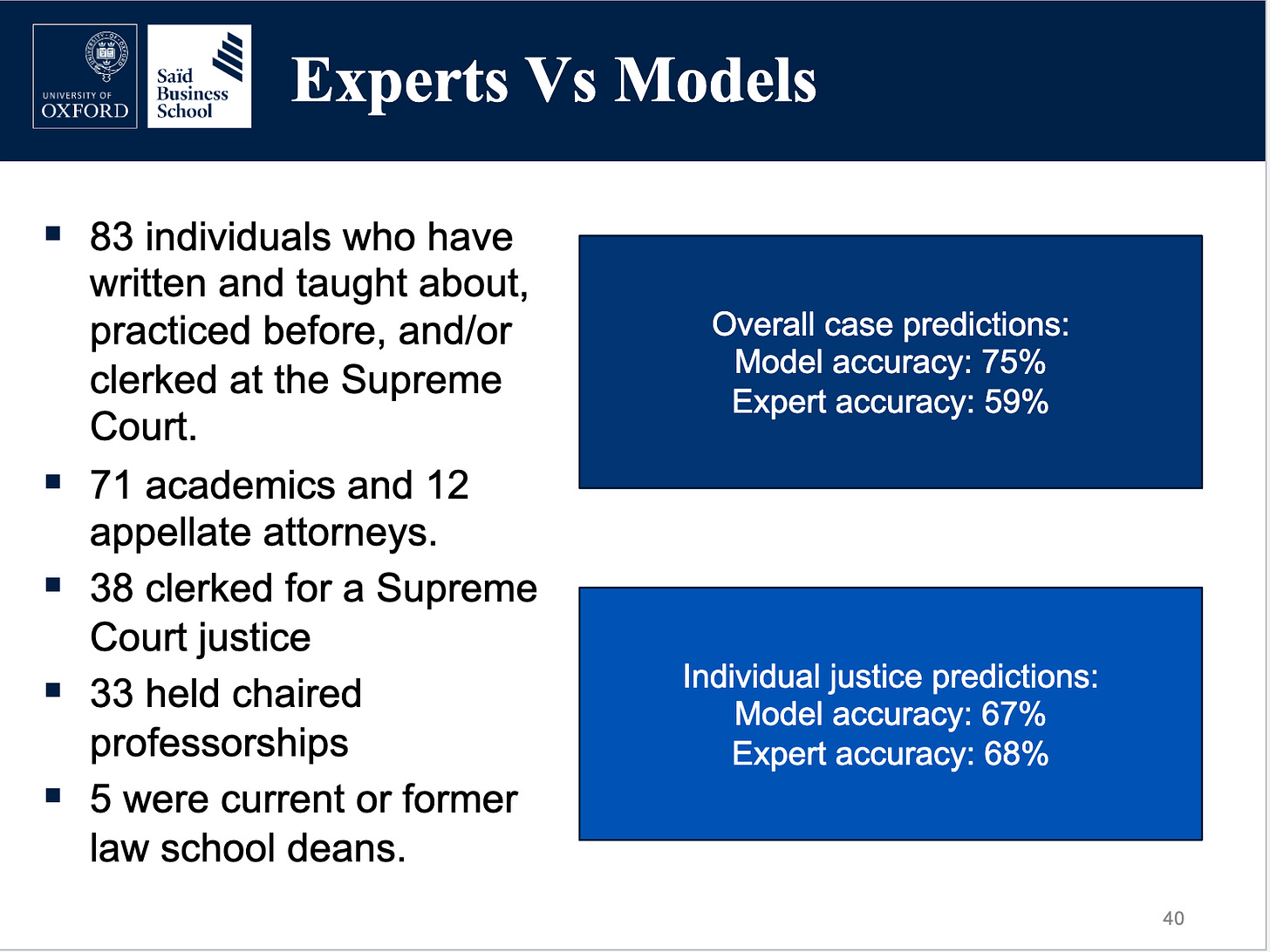

She used the example of predicting US Supreme Court judgements to explain decision trees and random forests. I was really impressed how she managed the class questions, and got through a lot of concepts with precise clarity. She took no prisoners.

It turns out that judgement prediction is a great use case for decision trees: the approach significantly outperforms expert panels, although it is, of course, by no means perfect. For more, see this paper.
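A minimal Python sketch shows the core mechanic of a decision tree. The tree repeatedly picks the yes/no question that best separates the outcomes, measured here by Gini impurity, until the groups are pure or a depth limit is reached. The cases and feature names (`lower_court_liberal`, `petitioner_is_us`, `constitutional_question`) are hypothetical toy data. They are not the data or model from the paper, and this is a simplified CART-style learner rather than any particular library's implementation.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels, features):
    """Return the binary feature whose split most reduces weighted Gini impurity."""
    best, best_score = None, gini(labels)
    n = len(labels)
    for f in features:
        left = [y for x, y in zip(rows, labels) if x[f]]
        right = [y for x, y in zip(rows, labels) if not x[f]]
        if not left or not right:
            continue
        score = len(left) / n * gini(left) + len(right) / n * gini(right)
        if score < best_score:
            best, best_score = f, score
    return best


def build_tree(rows, labels, features, max_depth=3):
    """Grow a tree of nested dicts; leaves are the majority label."""
    majority = Counter(labels).most_common(1)[0][0]
    if max_depth == 0 or len(set(labels)) == 1:
        return majority
    f = best_split(rows, labels, features)
    if f is None:
        return majority
    yes = [(x, y) for x, y in zip(rows, labels) if x[f]]
    no = [(x, y) for x, y in zip(rows, labels) if not x[f]]
    remaining = [g for g in features if g != f]
    return {
        "feature": f,
        "yes": build_tree([x for x, _ in yes], [y for _, y in yes], remaining, max_depth - 1),
        "no": build_tree([x for x, _ in no], [y for _, y in no], remaining, max_depth - 1),
    }


def predict(tree, x):
    """Walk from the root, answering each yes/no question, until reaching a leaf."""
    while isinstance(tree, dict):
        tree = tree["yes"] if x[tree["feature"]] else tree["no"]
    return tree


# Hypothetical toy cases (NOT real Supreme Court data): binary features -> outcome.
FEATURES = ["lower_court_liberal", "petitioner_is_us", "constitutional_question"]
CASES = [
    ({"lower_court_liberal": 1, "petitioner_is_us": 0, "constitutional_question": 1}, "reverse"),
    ({"lower_court_liberal": 1, "petitioner_is_us": 1, "constitutional_question": 0}, "reverse"),
    ({"lower_court_liberal": 1, "petitioner_is_us": 0, "constitutional_question": 0}, "reverse"),
    ({"lower_court_liberal": 0, "petitioner_is_us": 1, "constitutional_question": 1}, "reverse"),
    ({"lower_court_liberal": 0, "petitioner_is_us": 0, "constitutional_question": 1}, "affirm"),
    ({"lower_court_liberal": 0, "petitioner_is_us": 0, "constitutional_question": 0}, "affirm"),
]
tree = build_tree([x for x, _ in CASES], [y for _, y in CASES], FEATURES)
print(tree)
```

A random forest builds on this idea. It trains many such trees, each on a bootstrap resample of the cases and considering a random subset of features at each split, and then takes a majority vote. That averaging usually gives more stable predictions than any single tree.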

A morning of neural networks followed by an afternoon on convolutional networks is a bit of a brain fry. I’d done an online course in ML methods via MIT earlier in the year; without it I would not have kept up. Every time I dig into the fundamentals of ML, they become a bit clearer.

I’m never going to be a data scientist, but I understand their world a little better now. I’m slowly descending the gradient of my personal Dunning-Kruger curve. I’ll spend a bit of time over the next few weeks playing with Dataiku, Python and a pen and paper, as nothing beats practice to reinforce learning. I have a lot more work to do on the fundamentals of LLMs: softmax, fine-tuning, RAG, etc.
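Softmax is the step that turns a model's raw scores (logits) over candidate next tokens into probabilities that sum to 1. Here is a minimal Python sketch. The three-word vocabulary and its logits are made up for illustration. The `temperature` parameter follows the common convention of dividing the logits before the softmax, which is how temperature is typically applied when sampling from an LLM.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores (logits) into probabilities that sum to 1.

    Dividing by `temperature` first sharpens the distribution when
    temperature < 1 and flattens it when temperature > 1.
    """
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtracting the max avoids overflow and leaves the result unchanged
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical next-token candidates and logits, purely for illustration.
vocab = ["cat", "dog", "car"]
logits = [2.0, 1.0, 0.1]

for t in (0.5, 1.0, 2.0):
    probs = softmax(logits, temperature=t)
    print(t, {w: round(p, 3) for w, p in zip(vocab, probs)})
```

At temperature 1.0, "cat" gets roughly two-thirds of the probability. Lowering the temperature concentrates more probability on it, and raising the temperature spreads probability toward the less likely words.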

Bige also briefly explored the interplay between regulation and AI, through the lens of GDPR, the EU AI Act and my old friend, externalities. I’m probably more positive than most on GDPR; I’d argue its failings are largely down to half-arsed enforcement. The interplay between government policy, self-regulation, etc. continues to fascinate me. I hope we spend more time on this in a later module. There is a lot of great work going on at the Oxford Internet Institute, and perhaps we will get exposure to that. She also covered the troubling supply chain and market concentration issues on the hardware side of AI.

Felipe Thomasz, the course convenor, brought us back to the business model question. How will companies drive sustainable competitive advantage when technology commoditises so quickly? “Consider: is AI an internal capability of my firm? A dimension of competition for my product? My actual product?” AI is messing with established models of value creation and value capture. Felipe has a habit of posing awkward questions. I leave his lectures feeling less comfortable than when I went in, which is a good thing.

Dr Kristin Gilkes (Global Partner at EY, and ex-head of data science at JP Morgan) gave a dynamic talk about quantum computing. I’d not realised how far it had progressed. She was exuberant about its possibilities and about near-term breakthroughs. Technologies can languish for decades before breaking through, and then suddenly they do. My mind is now open to quantum’s potential; before, I’d dismissed it as a science project. I don’t share her position on AGI’s nearness, but I do think that at some point in the next few years quantum will move from the academic lab into mainstream business usage. The session also reminded me how great IBM is at research.

If I were to change the agenda, I would have given Jeremy Large a day rather than a couple of hours, and I would probably have swapped out Python for Dataiku, RapidMiner or similar. I’d also ramp up the pre-work for those who don’t code. I would have loved to hear more on Siddharth’s work on energy consumption prediction, and Natalia’s work on satellite imagery for the wine industry. I would have liked more on the specifics of the Chinese AI industry from Shumiao Ouyang. I’ll head off and find their research.

The cohort is coming together nicely, and there are some startup ideas brewing. The class debate was a lot of fun too. Being surrounded by people eager to learn and grow is a lovely, joyous thing. My special thanks to our class reps, Meryem and Sebastian; you are champions. Joanna Francis from SBS holds it all together so well in the background; nothing seems to faze her.

Does this expense and effort make me a better investor? Lacking an obvious control group, it is hard to say, and answering it would require a long time series, but I can now have a better conversation with founders about AI than I could before. I do have a lot of work to do on my own to process it all. I think of this course as creating the scaffolding (thanks Marc Ventresca for the imagery) upon which I will build my knowledge.

Thanks also to my data science friends who I bug constantly with my inane questions.

My biggest takeaway remains. Prediction is not causality.
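A minimal Python sketch of why prediction is not causality, using the classic made-up confounder example. Hypothetical hot days drive both ice cream sales and drownings. All numbers and variable names are illustrative assumptions. In observational data, ice cream sales predict drownings well, with a clearly positive regression slope. When sales are set independently of temperature, a stand-in for an intervention, the slope collapses to roughly zero, because ice cream never caused anything.

```python
import random


def ols_slope(x, y):
    """Slope of an ordinary least-squares fit of y on x: cov(x, y) / var(x)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var = sum((a - mx) ** 2 for a in x)
    return cov / var


def simulate_days(n=10_000, intervene=False, seed=1):
    """Generate hypothetical daily (ice cream sales, drownings) pairs."""
    rng = random.Random(seed)
    sales, drownings = [], []
    for _ in range(n):
        temp = rng.gauss(20, 5)  # hidden confounder: daily temperature
        if intervene:
            # Stand-in for an intervention: sales set independently of temperature.
            s = rng.gauss(40, 11)
        else:
            s = 2 * temp + rng.gauss(0, 5)  # hot days sell more ice cream
        # Drownings depend on temperature only, never on ice cream.
        d = 0.5 * temp + rng.gauss(0, 1)
        sales.append(s)
        drownings.append(d)
    return sales, drownings


observed_slope = ols_slope(*simulate_days())
intervened_slope = ols_slope(*simulate_days(intervene=True))
print("slope, observational data:", round(observed_slope, 3))
print("slope, after intervention:", round(intervened_slope, 3))
```

The observational model is a perfectly good predictor, but acting on it by banning ice cream would save no one. Only the intervention reveals the missing causal link.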

As is my wont, I’ll end with some music. Play it really loud. Window-shaking loud. Check out Kassandra’s story.
