In Auto-Sapients We Trust: Deployment and Adoption of AI in production.

Riffing on Rachel Botsman's lecture. Shift, Leap and Stack.

We could fill all our remaining days reading about the thrilling potential of AI. We can debate whether we are at the top of the hype cycle, or even whether the hype cycle applies. We can look for parallels in the rail industry, or the early Internet. We can try to predict AI's impact on what work will look like a few years from now. We can pontificate on whether AGI is here, near or a chimera. These are giddy times.

My main takeaway from the final module of the Oxford PG-DipAI was that deployment of AI solutions isn’t going to be as easy as it was to invent the technology. My module summary is here.

Rachel Botsman’s lecture

AI projects are going to ask all sorts of uncomfortable questions about data quality, change management, and readiness generally. More profoundly, they require our users to embrace a new form of trust. I keep coming back to Rachel Botsman’s lecture on Trust. She rather cleverly defines Trust as

A confident relationship with the unknown

She then went on to discuss Trust Shifts.

Rachel explores these further in an article in Forbes.

Local and institutional trust don’t need an explanation here. Examples of distributed trust include the internet, remote work, or perhaps blockchain.

Autosapient is an evocative neologism, combining automatic with “possessing or being able to possess wisdom.” I’m not completely comfortable with the term. It is overly anthropomorphic, but it is catchy.

To understand the disruption happening, it’s helpful to think of trust like energy: It doesn’t get destroyed; it changes form. Local trust flowed sideways, directly from person to person. Institutional trust flowed upwards to leaders, experts, referees, and regulators. Distributed trust changed the flow back sideways but in ways and on a scale never possible before. Now, with the rapid advancement of AI, autosapient trust will flow through an AI agent.

When organizations deploy AI solutions, we are asking people to make a trust leap. When we automated processes, we gave software a clear command, and we relied on it to execute that command correctly.

With AI we are asking for a new form of trust, where we give agency to the AI.

(I’ve explored agency a bit in a previous post. More to come on it soon).

I’d like to build a bit on this. We aren’t yet at the point where AI has genuine autosapience. For some decisions it is very good, for others less so, and for others worse than useless. The trust that we provide to AI must therefore be bounded. AI's level of autosapience is constrained by the state of the art of the technology, the quality of the data it has to decide with, the social norms of the users, and of course, regulation. Over time, these will change.

Successful AI adoption is going to hinge on users and economic buyers making the Trust Leap.

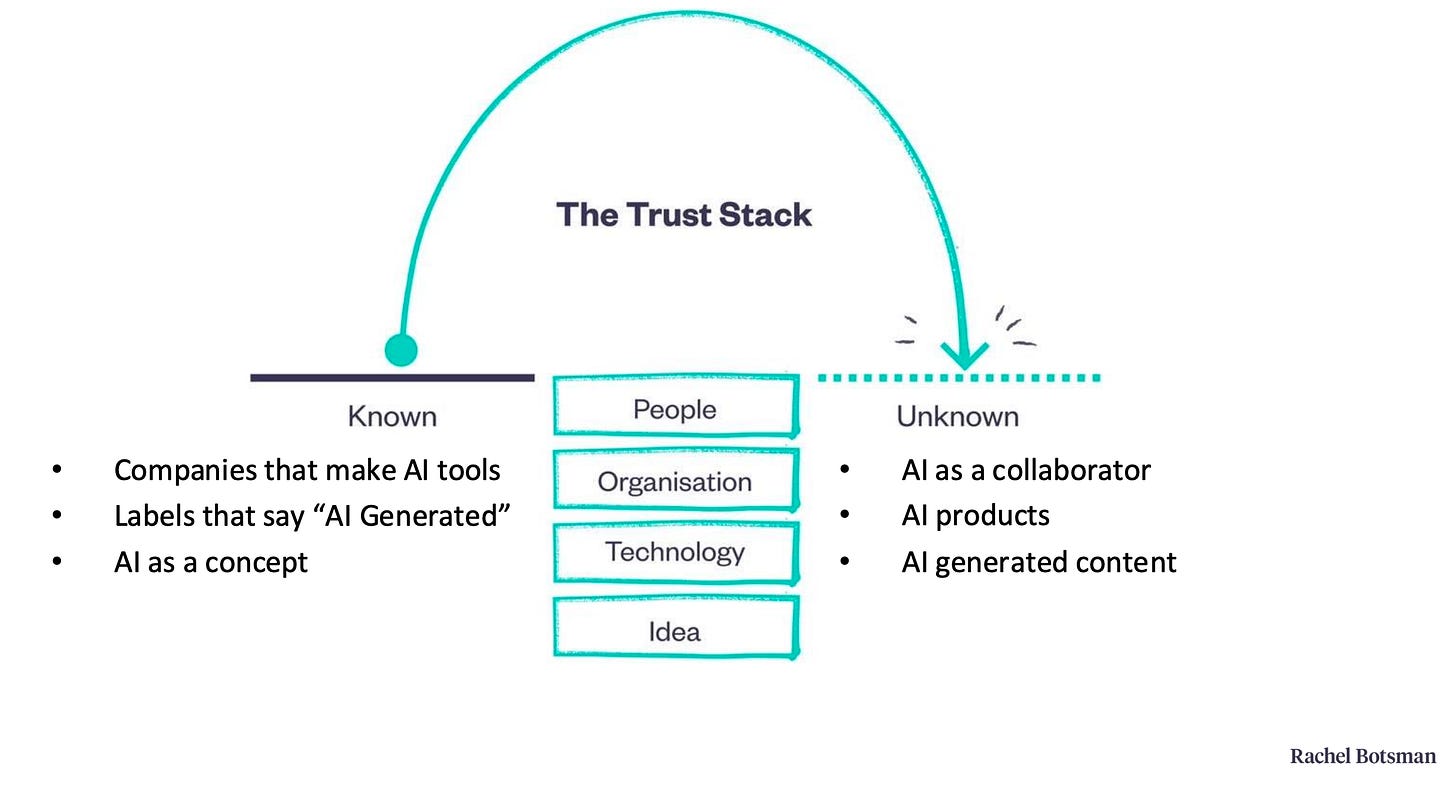

The final concept that Rachel explained is the trust stack. Her slides are brilliant in their aesthetic simplicity. I’d urge you to watch her stuff on YouTube and subscribe to her Substack.¹

How does this impact our investment thesis?

Almost all the pitches we see today involve some element of AI. The vast majority of our portfolio companies have significant AI capabilities.

Applying AI to HR and work processes in production requires that you gain the trust of multiple parties. If you use AI in recruitment, that means the applicants, the recruiters, the hiring managers and IT.

A lot of AI processing in HR is going to be high risk under the EU AI Act, and there are other regulatory considerations, such as labour laws and GDPR. Compliance with regulations is one way in which society signals trust, especially for the economic buyer.

So one of my new questions when evaluating investments is going to be: what is your strategy to build trust with your various users and constituents?

More specifically, we will see a new, distinct category of applications that help AI vendors signal trustworthiness. In humans, trustworthiness is a behaviour. So too in software. We will see applications emerge that help users and other applications assess and enhance trustworthiness.

We aren’t the only VC firm thinking about this.

I really like this post from Jasper at Cherry. He explores why great models aren’t deployed in production.

“For investors, this means looking beyond technical capabilities to understanding how effectively companies can bridge the gap between AI potential and business reality. The next wave of successful AI companies won't win by building better models—they'll win by helping enterprises successfully deploy them.”

I turn a lot to the work of Gianni Giacomelli to explore the jagged edge of AI. This post from earlier this year explores the questions around human in the loop. I found this diagram very useful. I try to read everything he writes; you should too.

Gianni also pointed me to this paper, Agent-as-a-Judge: Evaluate Agents with Agents. It explores how we might use agents to evaluate agents.

I’m intrigued by the concept of targeted friction. Sometimes we need to slow the AI down to encourage trust. Not all decisions need to be made quickly. We do talk glibly of the human in the loop, but I think we have work to do to figure out what makes a good human-in-the-loop process and outcome.

Transparency and explainability are very significant components of trustworthy applications. But the techniques to actually provide transparency and explainability in the context of LLMs are in their infancy. We will need to do a better job of explaining how and why an AI has made the decision it has. Being able to assess fairness is going to be key in HR AI deployments.

If an AI tool suggests dismissing or demoting an employee, how will the manager trust it?

I’m convinced that the HR and employee-related use cases are complex enough, and a big enough market, that we will see specialist tools emerge to help HR vendors and users establish and nurture trust. It’s more than just risk assessment or standards compliance, important though these are. Risk and Trust are not analogous, but they are interrelated.

Done right, we think these tools are VC-fundable businesses. Kombo has proven the need and opportunity for specialized HR integration tooling, and we think that there will be a category of tools that enhance trust and therefore adoption in a similar vein.

If you are building applications that help vendors or users signal and enhance trust in systems that impact how we work, we really want to talk to you.

As I usually do, I’ll end with a song. AI is going to get Messy, so here’s Messy by Lola Young. (Thanks Paul for the tip)

A couple of you have enjoyed a classical piece too. So here’s Jin-Kyung Kim with Bach.

Rachel has also looked at how work patterns are shifting, but this will need to wait for another post. Tetris v Minecraft needs more than a sentence.

Nice riff on Rachel's work. Collectively intelligent systems rely on trust for the signal to travel. That is true in organizations and society alike. With AI in the mix, we already see a development of distrust, which doesn't help at a time in which people don't trust institutions anyway. We should get ready. Part of the solution can indeed be the introduction of a little bit of friction. Humans in the loop are a little friction, as managers have been before them. Frictionless systems that you cannot trust have been proven to become "unstabler" over time. BTW I think HR will be a lightning rod for societal distrust. Let's roll up our sleeves and design human(s) in the loop as part of AI-augmented collective intelligence (not AI-only) systems.